TLDR: In mid-2023, a doctor friend and I built an AI RAG chatbot for emergency medicine as a side project. Over 2+ years, it answered 14,000+ questions from 500+ users and real doctors, generated 1,500+ patient instructions, all before vibe coding!

From Prototype to Real Users (Before Vibe Coding)

In June 2023 BC (before-Claude), about 7 months after ChatGPT was released, an emergency medicine resident friend and I decided to run an experiment.

Why Build This?

Emergency physicians face a constant challenge: staying current with rapidly evolving medical guidelines while working high-pressure shifts. My co-founder would often pull out their phone mid-shift to search UpToDate or Google, wading through dense text to find a quick answer. We thought: what if we could build something that understood medical context and gave fast, cited answers from trusted sources like FOAMed?

It wasn’t about building a company—it was a “science experiment” to see what we could build with this “new” LLM technology. Looking back, we were building RAG (Retrieval Augmented Generation) workflows for medical guidelines that have now become ubiquitous 2+ years later.

The Investment

This was my “professional development” budget—learning by doing. Over 2-3 years, we invested:

- $3,000-$4,000 in hosting and API costs

- Hundreds of hours outside our day jobs (as a data scientist and ER physician)

- Countless late nights debugging auth flows and optimizing retrieval

The ROI came in the form of deep technical learnings and seeing real users engage with something we built from scratch.

The Results in Numbers

We built the first prototype in a couple weeks using GPT-3.5-turbo (the state of the art at the time!), figuring out how to index FOAMed (Free Open Access Medical Education) content into early vector databases. We started with beta users, opened public signups in February 2024, and added patient instruction generation in January 2025.

The Experiment by the Numbers:

- 14,000+ questions answered

- 500+ user sign ups

- 1,500+ patient instructions generated

⚠️ DISCLAIMER ⚠️: this post is ~12 months delayed in being written so not much here is “state-of-the-art” anymore. Come along for the ride for how we got here but don’t expect cutting edge tech even if it “was” at the time.

With that out of the way, let’s dive in!

Quick & Dirty Prototyping

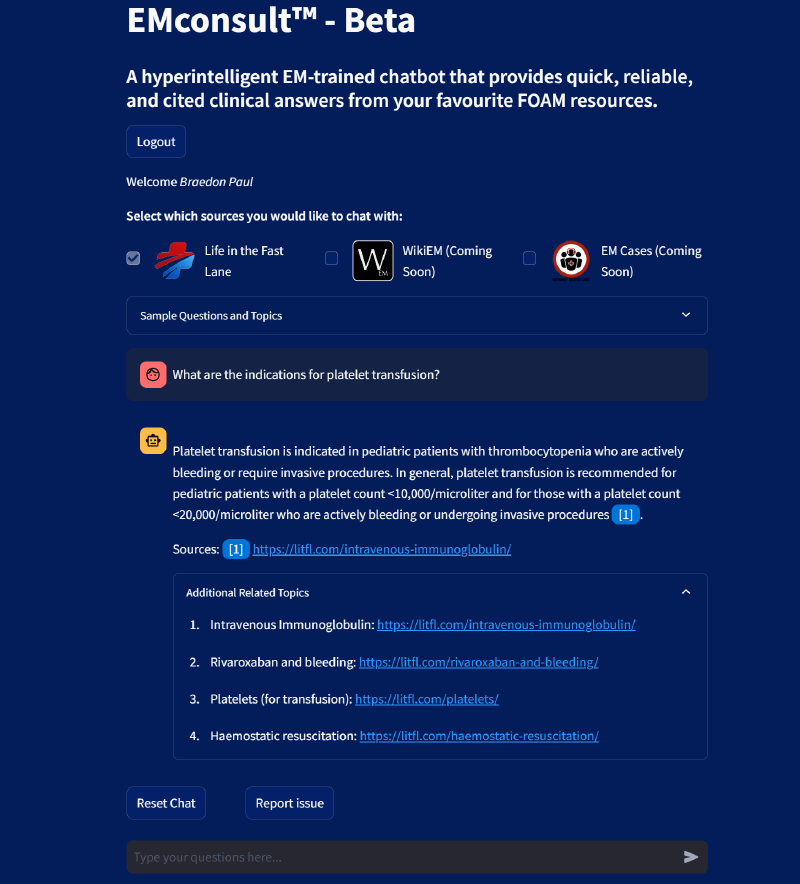

At this point, AI was helpful for coding but nowhere near what it is now. I built a first functional prototype over a couple of weeks using Streamlit, ChromaDB and OpenAI’s APIs. It ticked the boxes:

- Cited references in answers - LLMs can answer a lot of questions “correctly” but trusting the answer is hard without references

- Links to source documents - Being able to dig into the source material is key for clinicians

- Quick answers - EM physicians are busy, so speed matters
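
For readers who haven’t built one, here is a minimal sketch of the retrieve-then-cite pattern behind those three boxes. It is not EMChat’s actual code: a toy word-overlap scorer stands in for ChromaDB’s vector search, no OpenAI call is made, and the documents, URLs, and function names are all made up for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical placeholder corpus. A real system would index FOAMed posts.
DOCS = [
    {"title": "Placeholder post: ankle injuries",
     "url": "https://example.org/ankle",
     "text": "Placeholder notes on ankle sprain assessment and when imaging is considered."},
    {"title": "Placeholder post: chest pain",
     "url": "https://example.org/chest-pain",
     "text": "Placeholder notes on chest pain workup and risk scores."},
    {"title": "Placeholder post: pediatric fever",
     "url": "https://example.org/fever",
     "text": "Placeholder notes on fever in young children."},
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, docs, k=2):
    """Return the k documents most similar to the question (toy stand-in for vector search)."""
    q = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d["text"]))), reverse=True)
    return ranked[:k]


def build_prompt(question, sources):
    """Number each source so the model can cite [1], [2], ... and the UI can link back."""
    lines = ["Answer using only the numbered sources and cite them like [1].", ""]
    for i, src in enumerate(sources, start=1):
        lines.append(f"[{i}] {src['title']} ({src['url']})")
        lines.append(src["text"])
        lines.append("")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


question = "ankle sprain imaging"
print(build_prompt(question, retrieve(question, DOCS, k=1)))
```

The prompt that comes out is what would be sent to the LLM. Because every source carries its own number and URL, the answer can cite `[1]` and the interface can turn that citation into a link to the original post.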

This is what the very first functional prototype looked like (initial project name idea was EMConsult):

The first functional prototype of EMChat, then known as EMConsult.

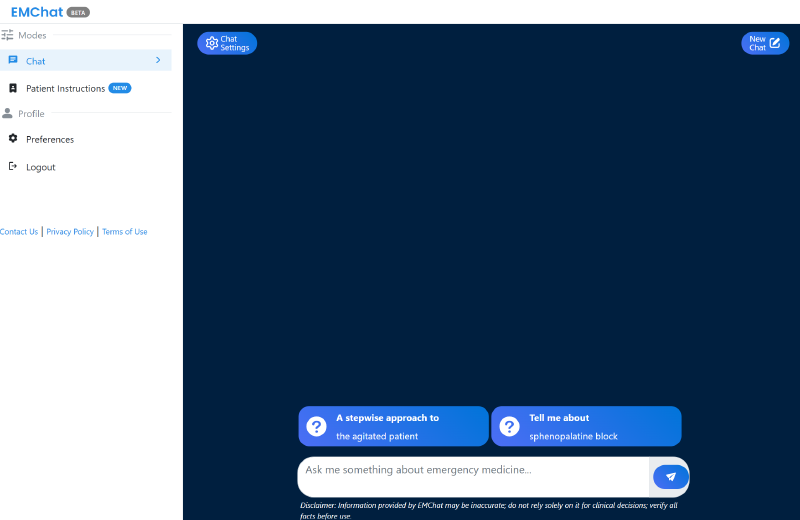

After a year of hacking away, learning auth flows, better models, retrieval optimizations, and deployment best practices, EMChat grew to look like this:

EMChat as of January 2026

Admittedly it hasn’t really been touched since ~January 2025 with the exception of cycling keys etc., but it’s still humming along!

You can try it out yourself at emchat.app (free to use with account signup).

Growth: Slow and Steady

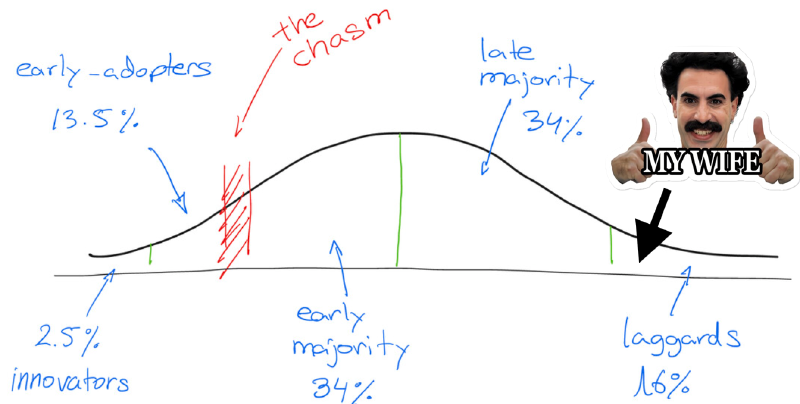

Getting users was an exercise in patience. It started slow. We tried Reddit ads for 4-5 months which drove signups, though a decent chunk of people never asked a question.

I thought my wife—an emergency physician—would be a guaranteed user. I was (almost) wrong. She is the definition of a late-adopter.

I almost had to torture her to try it on shift at first, but she ultimately tried it, used it more, and has since asked it 3,000+ questions over the past 2 years. A good litmus test for things to come!

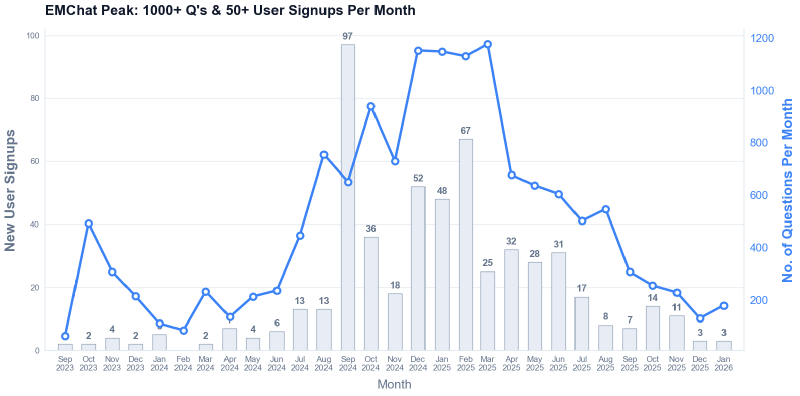

At its peak EMChat was getting 1,000+ questions and 50+ signups per month.

Here’s what surprised us: even with zero promotion since ~Jan 2025, we’re still seeing signups and a couple hundred questions every month.

Organic growth continues post-advertising. The Reddit ads drove initial signups, but questions per month have remained stable even after spending dropped to zero.

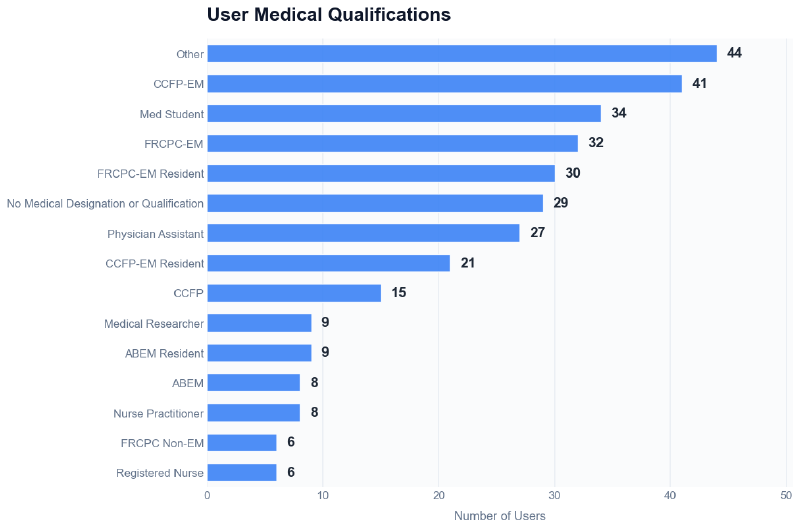

The user breakdown by medical qualifications revealed we were reaching the right audience: majority active physicians, residents, and physician assistants.

Breakdown of user qualifications showing the distribution of residents, physicians, and students.

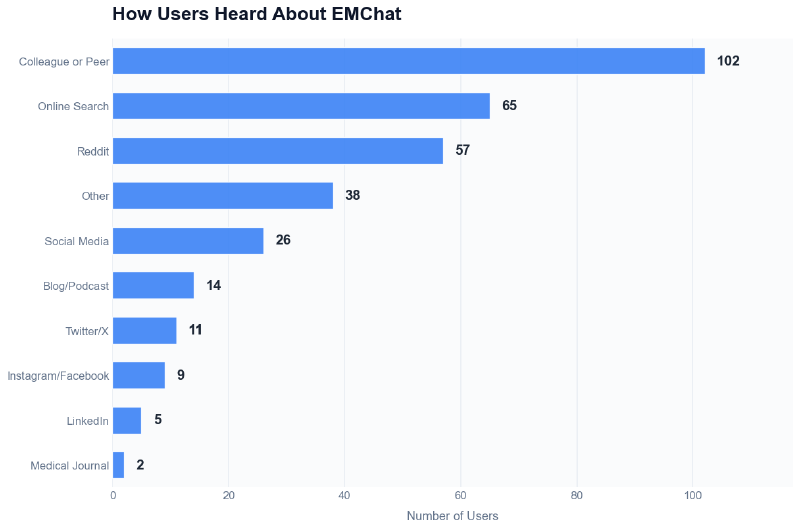

We had always known word-of-mouth within the medical community would be key, and we were able to confirm it when asking users how they heard about EMChat. We did have a surprising number of “organic” signups though! Still can’t find the medical journal that references us sadly.

How users heard about EMChat, highlighting the importance of word-of-mouth within the medical community.

The Power User Effect

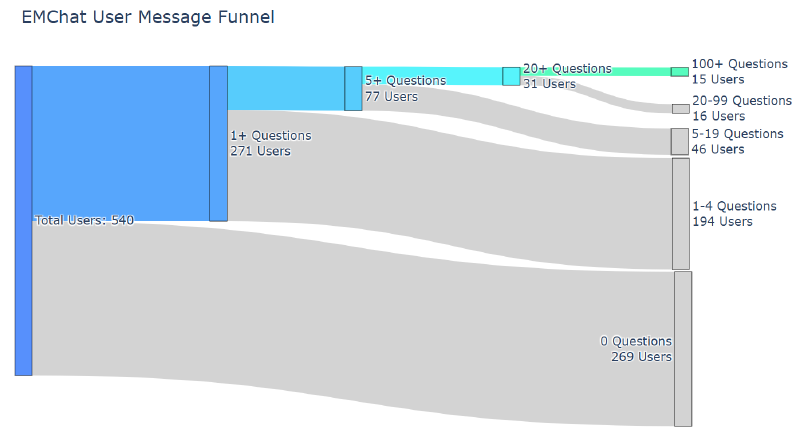

Most users signed up, asked a few questions, then dropped off. But 20-30 core users emerged, each asking ~50-100+ questions in a few months with some asking 1000+ over a year or more. These weren’t tire-kickers - they were incorporating EMChat into their daily workflow.

The Sankey diagram below tells the real story: while many signed up and left, a core group of 20-30 users consistently asked 20-1000+ questions.

User engagement funnel. A core group of 20-30 power users emerged who integrated the tool into their regular workflow.

Sadly we never got to the bottom of why ~50% of users were signing up without asking any questions. Some were attributed to signup flow drop-offs after email confirmation, but many just signed up and never asked anything as far as we could tell from all our analytics. We tried to reach out to users but almost never got a response from those drop-off users.

Patient Instructions: The Unexpected Hit

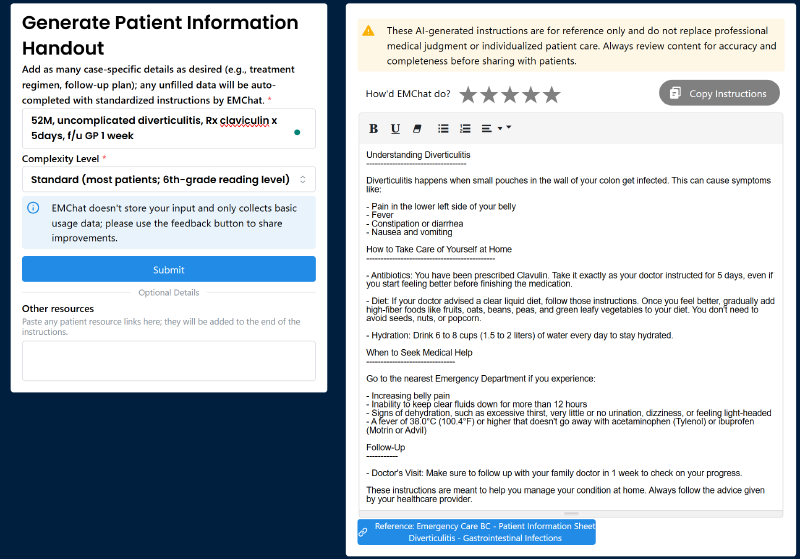

We launched patient instruction generation in January 2025. The tool combines recommended patient discharge templates with patient-specific instructions to adapt and personalize discharge paperwork. A doctor often has only 2-3 minutes to cover the essentials with a patient, which leaves no time to write fully fleshed out, easy-to-understand instructions.

EMChat’s patient instruction generation interface.

Privacy and PII are big concerns here. We make it painfully obvious that the physician should not enter any PII, and on top of that we don’t log or store any of the inputs or outputs.

You might be asking, how do we know it was working? We did the obvious: a “How did EMChat do?” prompt with a score out of 5 stars the user could select to give feedback. Anyone who’s deployed this style of system knows that formal user feedback drops off to nothing, especially with busy users.

Our Solution: We ended up putting a “Copy Instructions” button above the editor. If a user clicks it, we track that click as a proxy for the instructions likely being useful.

Feedback mechanism for patient instruction generation and the ‘Copy Instructions’ button for tracking clicks.

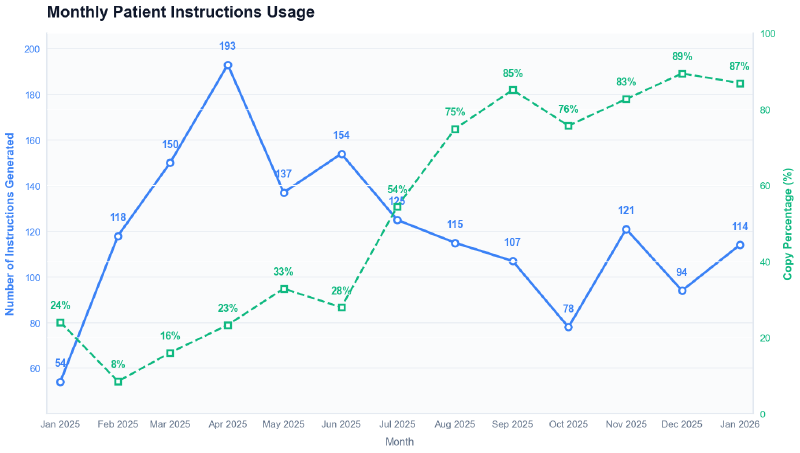

Over 90% of instructions are now being copied out of EMChat. That’s about as clear a signal as you can get that something’s working.
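
As a rough sketch of how a copy rate like this can be computed without storing any instruction text, the example below counts content-free events keyed by an opaque ID. The event shape, field names, and sample data are all hypothetical and are not EMChat’s actual schema or numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    instruction_id: str  # opaque random ID, never the instruction text itself
    month: str           # "YYYY-MM"
    kind: str            # "generated" or "copied"


def copy_rate_by_month(events):
    """Share of generated instructions copied at least once, per month."""
    generated = {}  # month -> set of instruction IDs generated that month
    copied = {}     # month -> set of instruction IDs copied that month
    for e in events:
        if e.kind == "generated":
            generated.setdefault(e.month, set()).add(e.instruction_id)
        elif e.kind == "copied":
            copied.setdefault(e.month, set()).add(e.instruction_id)
    # Sets make repeat clicks on the same instruction count only once.
    return {
        month: len(ids & copied.get(month, set())) / len(ids)
        for month, ids in generated.items()
    }


# Made-up sample data: four instructions generated, three copied (one of them twice).
EVENTS = [
    Event("a", "2025-02", "generated"),
    Event("b", "2025-02", "generated"),
    Event("c", "2025-02", "generated"),
    Event("d", "2025-02", "generated"),
    Event("a", "2025-02", "copied"),
    Event("a", "2025-02", "copied"),
    Event("b", "2025-02", "copied"),
    Event("c", "2025-02", "copied"),
]

print(copy_rate_by_month(EVENTS))
```

Counting unique IDs instead of raw clicks keeps one enthusiastic user from inflating the rate, and since only an ID, a month, and an event type are recorded, nothing a physician types ever needs to be logged.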

The chart shows something interesting - a small group of 5-10 users generates the majority of instructions, and they’re using it consistently month over month. These aren’t one-off experiments, they’re clinicians who’ve integrated this into their discharge workflow:

Monthly patient instruction generation with copy rates. The same power users appear each month, and the 90%+ copy rate indicates the instructions are actually being given to patients after the physician reviews/edits.

Medical professionals are tough critics and rarely give feedback unless something’s glaringly wrong. Patient instructions? Average 4.5/5 stars from 35 reviews.

What We Learned

Building the thing is the easy part. Getting people to trust it, use it, and come back - that’s where the real work happens. The fact that EMChat continues to grow organically with zero marketing tells us we were solving a real problem. Vibe coding now makes distribution the hardest part, since anyone can vibe-code an EMChat in a weekend!

Multiple companies like OpenEvidence and UpToDate, plus every EMR provider, are jumping on the AI bandwagon. It’s clear that AI-assisted clinical decision support is here to stay, but it’s not likely to be EMChat that “wins” in the end, and that’s okay.

There were lots of technical learnings along the way too which I’ll be covering in a follow-up post so stay tuned!

Thanks for reading along this far!

Try EMChat yourself at emchat.app (free to use with account signup).